7 Advanced Topics

7.1 Disk Images

Most disk images in Apt are stored and distributed in the Frisbee disk image format. They are stored at block level, meaning that, in theory, any filesystem can be used. In practice, Frisbee’s filesystem-aware compression is used, which causes the image snapshotting and installation processes to parse the filesystem and skip free blocks; this provides large performance benefits and space savings. Frisbee has support for filesystems that are variants of the BSD UFS/FFS, Linux ext, and Windows NTFS formats. The disk images created by Frisbee are bit-for-bit identical with the original image, with the caveat that free blocks are skipped and may contain leftover data from previous users.

Disk images in Apt are typically created by starting with one of Apt’s supplied images, customizing the contents, and taking a snapshot of the resulting disk. The snapshotting process reboots the node, as it boots into an MFS to ensure a quiescent disk. If you wish to bring in an image from outside of Apt or create a new one from scratch, please contact us for help; if this is a common request, we may add features to make it easier.

Apt has default disk image for each node type; after a node is freed by one experimenter, it is re-loaded with the default image before being released back into the free pool. As a result, profiles that use the default disk images typically instantiate faster than those that use custom images, as no disk loading occurs.

Frisbee loads disk images using a custom multicast protocol, so loading large numbers of nodes typically does not slow down the instantiation process much.

Images may be referred to in requests in three ways: by URN, by an unqualified name, and by URL. URNs refer to a specific image that may be hosted on any of the Apt-affilated clusters. An unqualified name refers to the version of the image hosted on the cluster on which the experiment is instantiated. If you have large images that Apt cannot store due to space constraints, you may host them yourself on a webserver and put the URL for the image into the profile. Apt will fetch your image on demand, and cache it for some period of time for efficient distribution.

Images in Apt are versioned, and Apt records the provenance of images. That is, when you create an image by snapshotting a disk that was previously loaded with another image, Apt records which image was used as a base for the new image, and stores only the blocks that differ between the two. Image URLs and URNs can contain version numbers, in which case they refer to that specific version of the image, or they may omit the version number, in which case they refer to the latest version of the image at the time an experiment is instantiated.

7.2 Storage Mechanisms

7.2.1 Overview of Storage Mechanisms

Apt offers a convenient way to specify storage resources in a profile. A blockstore, more commonly known as a dataset, is an abstraction of a block-addressable storage container of a specified size. Think of a dataset as a virtual disk that only your experiments can access. The most common way to use a dataset’s capacity is to make a filesystem on it, though that is up to the user.

A dataset may either be local, allocated on a node disk and directly accessed by the OS, or remote, located on a shared storage server and accessible via the experiment network fabric.

Datasets may also be either ephemeral, where the content lasts only as long as its referencing experiment, or persistent, where the lifetime is independent of an experiment and can thus be used across multiple successive experiments. Note that local datasets are always ephemeral, since node disks are re-imaged after every experiment use. (There is a pseudo-dataset type, the image-backed dataset, that can be used to explicitly capture the contents of a local dataset in a reusable way). Remote datasets can be ephemeral or persistent.

Persistent datasets may be either short-term or long-term. This is a policy distinction and not a technical one. The former is intended to allow fixed-duration (e.g., one week) access to larger datasets with fewer administrative hurdles. The latter is intended for longer term (e.g., months to years) ongoing access to size-limited datasets. Long-term datasets remain alive as long as they are being regularly used, but their creation is subject to per-project quotas for total size and might require interaction with Apt staff.

Persistent datasets may also be cloned, giving individual nodes their own mutable copy of a dataset. Currently, these clones are ephemeral, with per-node changes lost at experiment termination. This limits their utility. In the future, we plan to allow clones to be promoted to new persistent datasets, and allowed to replace the dataset they are cloning.

Datasets are not the only form of storage available to users. There is a legacy shared NFS filesystem that allows convenient but limited concurrent sharing between nodes within and across experiments.

All local storage is ephemeral. The contents of node disks will be lost when an experiment is terminated. You are responsible for saving data from local disks.

Most of these storage mechanisms are intra-cluster only. For example, a persistent dataset at Utah cannot be directly used by an experiment running on Clemson nodes. A new dataset would have to be created at Clemson, and the content explicitly copied over by the user from the Utah dataset. Likewise, the shared NFS filesystem at one cluster is independent of the shared filesystem at another cluster. Inter-cluster sharing mechanisms, e.g., scp or rsync, must be provided by the user at this time. The one exception is the Image-backed Dataset described below, which can be moved between clusters.

All of these storage mechanisms are subject to failure. Apt is not a storage provider. It is ultimately up to the users to make sure that important data artifacts are preserved. Node disks in particular are prone to failure; we do not configure them in any redundant way. Infrastructure services such as the iSCSI storage servers and the NFS servers have reasonable protection against hardware failures (e.g., RAID or ZFS), but most clusters do not have off-site backup of user data. If your data is important, back it up!

The following sections more concretely describe the storage resources and workflows available to Apt users.

7.2.2 Node-Local Storage

All nodes in Apt have at least 100GB of local storage, in the form of one or more SSDs (SATA or NVMe), spinning disks, or both. See the Hardware section for details of the local storage available on specific node types at each cluster.

By default, all Apt OS images have a 16GB system partition (partition 1) on the boot disk. The OS installs typically take up 1-3GBs of this space, leaving 10+GB of conveniently available storage (e.g., in /tmp). While this space is sufficient for many uses, there are times when more is desired.

7.2.2.1 Specifying Storage in a Profile – Local Datasets

If you know you will need additional storage before you create an experiment, then you can specify the storage needs in your profile by configuring one or more local datasets. See the Local Dataset example below. These datasets are automatically created by the Apt node configuration scripts at experiment instantiation. If you specify a mountpoint, the system will automatically create a filesystem in the dataset on first boot, and mount it on every boot.

Local datasets are implemented on Linux using LVM. When one or more datasets are required on a Linux node, the available storage on all drives is combined into an LVM volume group and individual datasets are allocated (striped across all involved disks) as logical volumes from that.

At this time, there is no way to specify redundancy on a local dataset. The LVM volume group and its corresponding logical volumes are effectively RAID0.

7.2.2.2 Allocating Storage in a Running Experiment

If you are in a situation where an existing experiment needs more space (i.e., you did not specify a dataset in the profile), we provide a script that can be run to quickly create a new filesystem using the remaining space on the system (boot) disk:

sudo /usr/local/etc/emulab/mkextrafs.pl /mydata

This will provide you with anywhere from 90GB to 1TB, depending on the node type you are on.

It is also possible to use Linux tools to create your own partitions, filesystems, LVM, ZFS, RAID, or other storage configurations. You can also resize the OS partition to include all storage on the boot disk. These techniques are strongly discouraged, as the resulting configurations will only last til the experiment ends and may interfere with the Apt imaging tools.

7.2.2.3 Persisting Local Data

Since most Apt nodes are directly accessible from the Internet, you can use your favorite tools (e.g., tar/scp or rsync) to offload your data from a node to your home before the experiment, terminates. You can copy data from local disks to the shared NFS filesystem, but this practice is strongly discouraged.

You can also make your data part of a custom OS image. By placing it in a directory like /data, it will be included in any snapshot you make. Do not put it in your home directory on the node, or transient locations like /tmp or /var/tmp as those are not captured in snapshots. This practice of “baking” data into an OS image is generally not a good idea as it ties the data to a specific OS and can result in very large images which might put you over disk quota.

Apt does provide one way to persist local disk data in a format that can be loaded on future experiment nodes independent of the OS image used and can be exported to other clusters. These Image-backed Datasets are described next.

7.2.3 Image-backed Datasets

An image-backed dataset is a snapshot of a local dataset created using a Apt Disk Image. Since disk images can be used across clusters, an image-backed dataset is a convenient way of both persisting data from a node and enabling it to be used in different experiments on different clusters.

An image-backed dataset can be updated from any node on which it is installed by taking a snapshot as you would an OS image. However, image-backed datasets are not versioned, there is always only one version of the dataset across all clusters. Note also that creating a new version of the dataset does not affect other currently installed copies of the dataset on other nodes. While the portal will not allow simultaneous snapshots of the same dataset, it is ultimately up to the user to ensure consistency across uses of the dataset.

An image-backed dataset can be protected as readable (install-able) by just members of your project or by anyone. Independently, they can be protected as writable (update-able) either by just yourself or by members of your project.

Examples of creation, use and updating of an image-backed dataset are shown in the Storage Examples section.

Note that the size of image-backed datasets is limited by individual clusters. Typically, this limit is around 20GB of compressed data per image, so these datasets are not well suited to very large datasets.

Note also that image-backed datasets, like all Apt images, are in the custom frisbee format and cannot be easily examined or used outside of Apt.

7.2.4 Remote Datasets

Remote datasets are network accessible storage volumes. Specifically, they are hosted on per-cluster, infrastructure-provided storage servers and exposed to experiment nodes via the iSCSI protocol on the experiment network fabric. Most, but not all, cluster in Apt have at least one storage server. See the Hardware section for details on available remote storage at each cluster. A remote dataset appears on an experiment node as disk device, typically /dev/sdb or /dev/sdc, depending on how many local disks a node has. Multiple datasets will result in multiple disk devices.

Remote datasets are intended to provide access to a larger quantity of storage than what is available locally on most nodes. While the total space available on Apt storage servers is modest (20-100TB), it does at least allow for multi-TB datasets on nodes. Because these datasets are accessed as part of an experiment’s private network topology, the content is more secure and there is less impact on other experiments relative to sharing mechanisms that use the control network (e.g., the shared NFS filesystem).

An ephemeral remote dataset allows node-private storage larger than what is available on the local disks. They are created at experiment instantiation and destroyed at experiment termination.

Persistent remote datasets provide efficient access to remote storage that persists across experiment instantiations. Because the data resides remotely and does not need to be copied in at experiment startup and copied off at termination, it potentially allows large-data experiment instances to run for shorter lengths of time per instantiation. Simultaneous access to remote datasets by multiple nodes within and across experiments is also possible, with certain limitations. Read-only sharing of a dataset, or use of per-node read-write clones of a dataset, are always safe. Simultaneous read-write sharing of a remote dataset is possible, but almost certainly not what you want. Unless the OSes on all sharing nodes are coordinating their writes to the dataset, you will almost certainly wind up with a corrupted dataset. If you need a shared filesystem on persistent storage, see the example Shared Filesystem profile.

7.2.5 NFS Shared Filesystems

The original Emulab mechanism for sharing and persistence was a set of shared NFS filesystems hosted by an infrastructure server and accessed over the control network. This mechanism is still available in Apt, but only for the /proj hierarchy. While NFS provides an extremely easy to understand and use method for sharing and persisting data, it is extremely inefficient for some workloads such as those that are metadata intensive (e.g., creating lots of files when unpacking a tarball) or bandwidth intensive (e.g., simultaneous reading or writing of large files). This can place considerable load on a central shared resource and adversely affect other experiments and even the Apt control framework. For this reason, NFS is strongly discouraged for real-time data capture or logging.

7.2.6 Storage Type Summary (TL;DR)

The following table attempts to summary the various storage mechanisms and their attributes. Persistent indicates whether modified data remains after an experiment is terminated. Multi-node indicates if and how the data can be used by multiple nodes simultaneously, Capacity is the rough size of the storage available for an instance of the mechanism, Throughput is a rough estimate of the best-case (sequential) throughput of the storage mechanism, and Use Cases describes when the mechanism is appropriate and notes other characteristics.

Method |

| Persistent |

| Multi-node |

| Capacity |

| Throughput |

| Use Cases |

Per-node local root filesystem |

| No |

| No |

| ~10GB |

| 100-300MB/sec |

| Sufficient for the majority of experiments; no explicit setup required |

Per-node extra filesystem |

| No |

| No |

| 90GB to 1TB |

| 100-200MB/sec |

| When more than 10GB is needed; setup after experiment start; user must choose an appropriate node type; user must explicitly create |

Per-node local blockstore |

| No |

| No |

| 90GB to 40TB |

| 100-1000MB/sec |

| When up to 40TB is needed; specified by size in profile, system picks the node type; automatically setup; may stripe on multiple disks |

Image-backed dataset |

| Yes |

| Yes, all nodes get a copy |

| 10-20GB |

| 100-1000MB/sec |

| When persistence and local disk speed is needed, but not large capacity; must be snapshotted to save modifications |

Infrastruture-provided shared NFS filesystem |

| Yes |

| Yes, all nodes read-write share |

| up to 100GB |

| 20-100MB/sec |

| When true sharing is needed, use is discouraged for many-node or high IO-op experiments |

Ephemeral remote datasets |

| No |

| No |

| up to 10TB |

| 50-200MB/sec |

| When large capacity but not high throughput is needed on a single node |

Persistent remote datasets |

| Yes |

| Yes, all nodes can read-write share |

| up to 10TB |

| 50-200MB/sec |

| When persistence and large capacity but not high throughput are needed; read-write sharing between nodes requires @emph{extreme care}. |

Persistent remote dataset clones |

| No |

| Yes, all nodes get a copy |

| up to 10TB |

| 50-200MB/sec |

| When large scale sharing and large capacity but not persistence or high throughput are needed; clones are read-write but changes are not persistent |

7.2.7 Example Storage Profiles

7.2.7.1 Creating a Node-local Dataset

If you know in advance that you will need more that the ~10GB available on the root filesystem of any OS image, you can create a local dataset with a specified size as demonstrated in this profile:

"""This profile demonstrates how to add some extra *local* disk space on your node. In general nodes have much more disk space then what you see with `df` when you log in. That extra space is in unallocated partitions or additional disk drives. An *ephemeral blockstore* is how you ask for some of that space to be allocated and mounted as a **temporary** filesystem (temporary means it will be lost when you terminate your experiment). Instructions: Log into your node, your **temporary** file system in mounted at `/mydata`. """ # Import the Portal object. import geni.portal as portal # Import the ProtoGENI library. import geni.rspec.pg as rspec # Import the emulab extensions library. import geni.rspec.emulab # Create a Request object to start building the RSpec. request = portal.context.makeRequestRSpec() # Allocate a node and ask for a 30GB file system mounted at /mydata node = request.RawPC("node") node.disk_image = "urn:publicid:IDN+emulab.net+image+emulab-ops//UBUNTU16-64-STD" bs = node.Blockstore("bs", "/mydata") bs.size = "30GB" # Print the RSpec to the enclosing page. portal.context.printRequestRSpec() Open this profile on Apt"""This profile demonstrates how to add some extra *local* disk space on your node. In general nodes have much more disk space then what you see with `df` when you log in. That extra space is in unallocated partitions or additional disk drives. An *ephemeral blockstore* is how you ask for some of that space to be allocated and mounted as a **temporary** filesystem (temporary means it will be lost when you terminate your experiment). Instructions: Log into your node, your **temporary** file system in mounted at `/mydata`. """ # Import the Portal object. import geni.portal as portal # Import the ProtoGENI library. import geni.rspec.pg as rspec # Import the emulab extensions library. import geni.rspec.emulab # Create a Request object to start building the RSpec. request = portal.context.makeRequestRSpec() # Allocate a node and ask for a 30GB file system mounted at /mydata node = request.RawPC("node") node.disk_image = "urn:publicid:IDN+emulab.net+image+emulab-ops//UBUNTU16-64-STD" bs = node.Blockstore("bs", "/mydata") bs.size = "30GB" # Print the RSpec to the enclosing page. portal.context.printRequestRSpec()

Instantiating this profile will give you a single node with a dataset containing an empty filesystem mounted at /mydata.

7.2.7.2 Creating an Image-backed Dataset from a Node-local Dataset

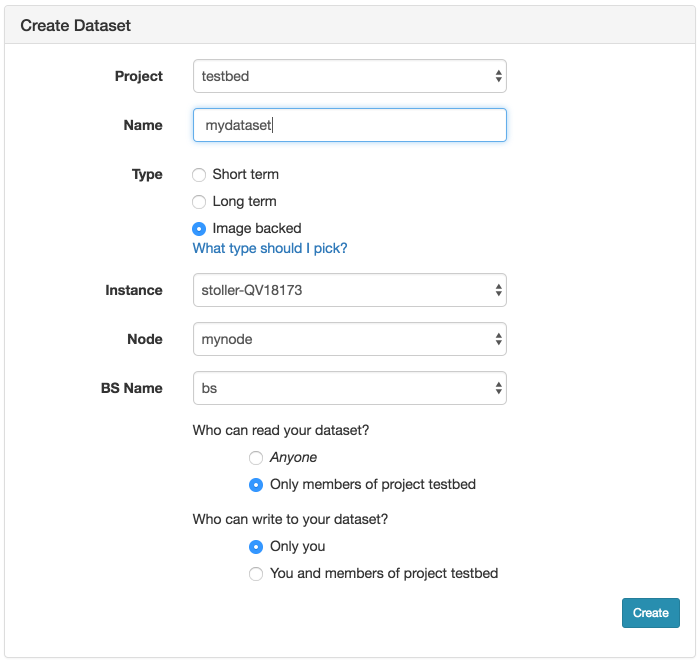

If you have created a node-local dataset as described above and populated it, then you can persist it by creating a new image-backed dataset. Click on the "Create Dataset" option in the Actions menu. This will bring up the form to create a new dataset:

As shown, choose a name for your dataset and optionally the project the dataset should be associated with. Be sure to select Image Backed for the type. Then choose the experiment (Instance), which node in the experiment (Node), and which local dataset on the node (BS Name). The dataset name will be the first argument to the node.Blockstore method invocation in the profile used to create the local dataset (bs in the example above).

Before clicking the Create button, make sure that you have no processes running on the node and accessing the mounted filesystem. This might include processes logging to a file in that filesystem or your interactive shell if you are cd’ed to that directory. If you do not do this, the image creation will fail when it tries to unmount the filesystem to ensure a consistent snapshot.

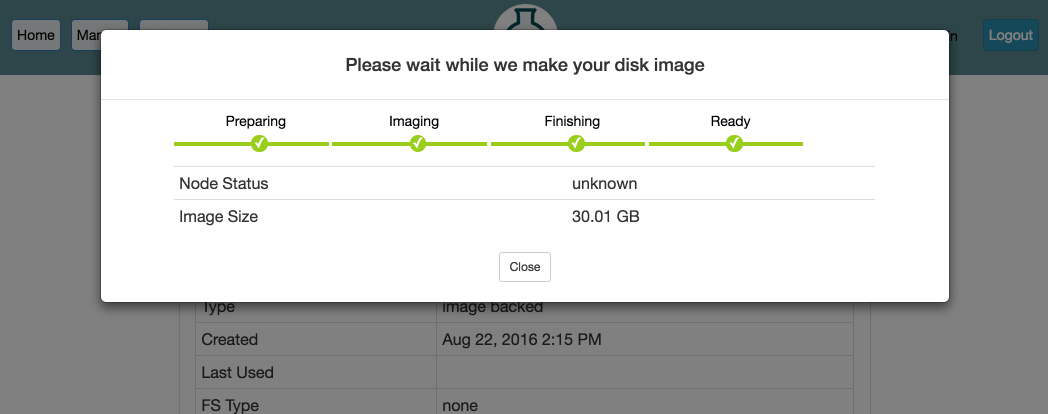

After clicking Create, the process can take several minutes or longer, depending on the size of the file system. Progress will be displayed on the page:

When the progress bar reaches the Ready stage, your new dataset is ready! It will now show up in your Storage drop-down under My Datasets and can be used in new experiments.

7.2.7.3 Using and Updating an Image-backed Dataset

To use an existing image-backed dataset, you will need to reference it in your profile, as demonstrated in:

"""An example of an image backed dataset. The dataset name and mountpoint can be customized when you instantiate the profile. Instructions: Log into your node, your dataset filesystem is in the directory you specified during profile instantiation.""" # Import the Portal object. import geni.portal as portal # Import the ProtoGENI library. import geni.rspec.pg as pg # Import the emulab extensions library. import geni.rspec.emulab # Create a portal context, needed to defined parameters pc = portal.Context() # Create a Request object to start building the RSpec. request = pc.makeRequestRSpec() pc.defineParameter("DATASET", "URN of your image-backed dataset", portal.ParameterType.STRING, "urn:publicid:IDN+emulab.net:testbed+imdataset+pgimdat") pc.defineParameter("MPOINT", "Mountpoint for file system", portal.ParameterType.STRING, "/mydata") params = pc.bindParameters() node = request.RawPC("mynode") node.disk_image = 'urn:publicid:IDN+emulab.net+image+emulab-ops//UBUNTU16-64-STD' bs = node.Blockstore("bs", params.MPOINT) bs.dataset = params.DATASET pc.printRequestRSpec(request)Open this profile on Apt"""An example of an image backed dataset. The dataset name and mountpoint can be customized when you instantiate the profile. Instructions: Log into your node, your dataset filesystem is in the directory you specified during profile instantiation.""" # Import the Portal object. import geni.portal as portal # Import the ProtoGENI library. import geni.rspec.pg as pg # Import the emulab extensions library. import geni.rspec.emulab # Create a portal context, needed to defined parameters pc = portal.Context() # Create a Request object to start building the RSpec. request = pc.makeRequestRSpec() pc.defineParameter("DATASET", "URN of your image-backed dataset", portal.ParameterType.STRING, "urn:publicid:IDN+emulab.net:testbed+imdataset+pgimdat") pc.defineParameter("MPOINT", "Mountpoint for file system", portal.ParameterType.STRING, "/mydata") params = pc.bindParameters() node = request.RawPC("mynode") node.disk_image = 'urn:publicid:IDN+emulab.net+image+emulab-ops//UBUNTU16-64-STD' bs = node.Blockstore("bs", params.MPOINT) bs.dataset = params.DATASET pc.printRequestRSpec(request)

This profile takes the dataset URN to use as a parameter during instantiation. You can find the URN for your dataset on the information page for the dataset. From the Storage drop-down, click on My Datasets, find the name of your dataset in the list, and click on it.

Once instantiated, the dataset will be accessible under /mydata (or whatever mountpoint you specified).

If you make changes and want to preserve them, you can update your dataset by using the Modify button on the My Datasets page for the dataset in question.

7.2.7.4 Creating a Remote Dataset

Creating a remote dataset is very similar to the process just described for creating an image-backed dataset. Click on the "Create Dataset" option in the Storage drop-down menu. This will bring up the form to create a new dataset:

Screenshot TBD

Choose a name for your dataset and optionally the project the dataset should be associated with.

Select Short term or Long term as the Type depending on your needs (click on “Which type should I pick” for more info).

Pick a Size, keeping in mind that sizes in excess of 1TB are likely to require administrative approval.

Pick the Cluster at which the dataset will be created. Recall that remote datasets are specific to a cluster and can only be used by nodes at that cluster.

If you selected a short-term dataset, you will need to fill in the expiration date (Expires), again keeping in mind that dates more than 1-2 weeks in the future will require administrative approval.

Pick the initial filesystem type you would like created on the dataset. Almost certainly you will want to use the default ext4. It is not necessary to create a filesystem (choose “none”) if you want to use the dataset as a raw disk or if you want to create your own filesystem on it when you first use it. Note however, if you do not choose a filesystem now, then you cannot set a mountpoint when you first use the dataset.

Finally, select the read and write permissions for the dataset.

After clicking Create, you may get a message informing you Your dataset needs to be approved! and giving you a reason why. If this is the case, your dataset will be shown as “unapproved” and you can either let it go and see if it is approved by Apt administrators, or you can Delete and try again with different parameters. (Note that Modify will only allow you to change the permission settings and cannot be used to alter the size or duration of the dataset.) The creation process can take several minutes or longer, depending on whether you specified a filesystem and what its size and type are.

Once it shows up in your My Datasets list as valid, you can use it in new experiments.

7.2.7.5 Using a Remote Dataset on a Single Node

Once you have created a remote dataset, you can make use of it in experiments. In many situations, you may only need to use the dataset on a single node. This is certainly the case after you have just created the dataset and need to populate it. This following profile demonstrates how to use a remote dataset:

"""This profile demonstrates how to use a remote dataset on your node, either a long term dataset or a short term dataset, created via the Portal. Instructions: Log into your node, your dataset file system in mounted at `/mydata`. """ # Import the Portal object. import geni.portal as portal # Import the ProtoGENI library. import geni.rspec.pg as rspec # Import the emulab extensions library. import geni.rspec.emulab # Create a Request object to start building the RSpec. request = portal.context.makeRequestRSpec() # Add a node to the request. node = request.RawPC("node") # We need a link to talk to the remote file system, so make an interface. iface = node.addInterface() # The remote file system is represented by special node. fsnode = request.RemoteBlockstore("fsnode", "/mydata") # This URN is displayed in the web interfaace for your dataset. fsnode.dataset = "urn:publicid:IDN+emulab.net:portalprofiles+ltdataset+DemoDataset" # # The "rwclone" attribute allows you to map a writable copy of the # indicated SAN-based dataset. In this way, multiple nodes can map # the same dataset simultaneously. In many situations, this is more # useful than a "readonly" mapping. For example, a dataset # containing a Linux source tree could be mapped into multiple # nodes, each of which could do its own independent, # non-conflicting configure and build in their respective copies. # Currently, rwclones are "ephemeral" in that any changes made are # lost when the experiment mapping the clone is terminated. # #fsnode.rwclone = True # # The "readonly" attribute, like the rwclone attribute, allows you to # map a dataset onto multiple nodes simultaneously. But with readonly, # those mappings will only allow read access (duh!) and any filesystem # (/mydata in this example) will thus be mounted read-only. Currently, # readonly mappings are implemented as clones that are exported # allowing just read access, so there are minimal efficiency reasons to # use a readonly mapping rather than a clone. The main reason to use a # readonly mapping is to avoid a situation in which you forget that # changes to a clone dataset are ephemeral, and then lose some # important changes when you terminate the experiment. # #fsnode.readonly = True # Now we add the link between the node and the special node fslink = request.Link("fslink") fslink.addInterface(iface) fslink.addInterface(fsnode.interface) # Special attributes for this link that we must use. fslink.best_effort = True fslink.vlan_tagging = True # Print the RSpec to the enclosing page. portal.context.printRequestRSpec()Open this profile on Apt"""This profile demonstrates how to use a remote dataset on your node, either a long term dataset or a short term dataset, created via the Portal. Instructions: Log into your node, your dataset file system in mounted at `/mydata`. """ # Import the Portal object. import geni.portal as portal # Import the ProtoGENI library. import geni.rspec.pg as rspec # Import the emulab extensions library. import geni.rspec.emulab # Create a Request object to start building the RSpec. request = portal.context.makeRequestRSpec() # Add a node to the request. node = request.RawPC("node") # We need a link to talk to the remote file system, so make an interface. iface = node.addInterface() # The remote file system is represented by special node. fsnode = request.RemoteBlockstore("fsnode", "/mydata") # This URN is displayed in the web interfaace for your dataset. fsnode.dataset = "urn:publicid:IDN+emulab.net:portalprofiles+ltdataset+DemoDataset" # # The "rwclone" attribute allows you to map a writable copy of the # indicated SAN-based dataset. In this way, multiple nodes can map # the same dataset simultaneously. In many situations, this is more # useful than a "readonly" mapping. For example, a dataset # containing a Linux source tree could be mapped into multiple # nodes, each of which could do its own independent, # non-conflicting configure and build in their respective copies. # Currently, rwclones are "ephemeral" in that any changes made are # lost when the experiment mapping the clone is terminated. # #fsnode.rwclone = True # # The "readonly" attribute, like the rwclone attribute, allows you to # map a dataset onto multiple nodes simultaneously. But with readonly, # those mappings will only allow read access (duh!) and any filesystem # (/mydata in this example) will thus be mounted read-only. Currently, # readonly mappings are implemented as clones that are exported # allowing just read access, so there are minimal efficiency reasons to # use a readonly mapping rather than a clone. The main reason to use a # readonly mapping is to avoid a situation in which you forget that # changes to a clone dataset are ephemeral, and then lose some # important changes when you terminate the experiment. # #fsnode.readonly = True # Now we add the link between the node and the special node fslink = request.Link("fslink") fslink.addInterface(iface) fslink.addInterface(fsnode.interface) # Special attributes for this link that we must use. fslink.best_effort = True fslink.vlan_tagging = True # Print the RSpec to the enclosing page. portal.context.printRequestRSpec()

You can find the URN for your dataset on the information page for the dataset. From the Storage drop-down, click on My Datasets, find the name of your dataset in the list, and click on it.

Note the “fslink” settings best_effort and vlan_tagging. These should always be set since some node types have only a single experimental interface. Since the remote dataset uses a network link and your experiment topology might also include a LAN with multiple nodes (see the following examples for multiple nodes), both uses will need to share the physical interface.

7.2.7.6 Using a Remote Dataset on Multiple Nodes via a Shared Filesystem

You cannot simply create a filesystem in a persistent remote dataset and directly share that among nodes in an experiment. If you want to share a standard Linux filesystem among nodes in an experiment, you can instead have one node in your experiment map the dataset read-write and have it act as an NFS server, exporting the dataset filesystem to all other nodes in the experiment via NFS on a shared LAN. This profile configures such an experiment with a variable number of client nodes:

"""This profile sets up a simple NFS server and a network of clients. The NFS server uses a long term dataset that is persistent across experiments. In order to use this profile, you will need to create your own dataset and use that instead of the demonstration dataset below. If you do not need persistant storage, we have another profile that uses temporary storage (removed when your experiment ends) that you can use. Instructions: Click on any node in the topology and choose the `shell` menu item. Your shared NFS directory is mounted at `/nfs` on all nodes.""" # Import the Portal object. import geni.portal as portal # Import the ProtoGENI library. import geni.rspec.pg as pg # Import the Emulab specific extensions. import geni.rspec.emulab as emulab # Create a portal context. pc = portal.Context() # Create a Request object to start building the RSpec. request = pc.makeRequestRSpec() # Only Ubuntu images supported. imageList = [ ('urn:publicid:IDN+emulab.net+image+emulab-ops//UBUNTU18-64-STD', 'UBUNTU 18.04'), ('urn:publicid:IDN+emulab.net+image+emulab-ops//UBUNTU16-64-STD', 'UBUNTU 16.04'), ('urn:publicid:IDN+emulab.net+image+emulab-ops//CENTOS7-64-STD', 'CENTOS 7'), ] # Do not change these unless you change the setup scripts too. nfsServerName = "nfs" nfsLanName = "nfsLan" nfsDirectory = "/nfs" # Number of NFS clients (there is always a server) pc.defineParameter("clientCount", "Number of NFS clients", portal.ParameterType.INTEGER, 2) pc.defineParameter("osImage", "Select OS image", portal.ParameterType.IMAGE, imageList[2], imageList) # Always need this when using parameters params = pc.bindParameters() # The NFS network. All these options are required. nfsLan = request.LAN(nfsLanName) nfsLan.best_effort = True nfsLan.vlan_tagging = True nfsLan.link_multiplexing = True # The NFS server. nfsServer = request.RawPC(nfsServerName) nfsServer.disk_image = params.osImage # Attach server to lan. nfsLan.addInterface(nfsServer.addInterface()) # Initialization script for the server nfsServer.addService(pg.Execute(shell="sh", command="sudo /bin/bash /local/repository/nfs-server.sh")) # Special node that represents the ISCSI device where the dataset resides dsnode = request.RemoteBlockstore("dsnode", nfsDirectory) dsnode.dataset = "urn:publicid:IDN+emulab.net:portalprofiles+ltdataset+DemoDataset" # Link between the nfsServer and the ISCSI device that holds the dataset dslink = request.Link("dslink") dslink.addInterface(dsnode.interface) dslink.addInterface(nfsServer.addInterface()) # Special attributes for this link that we must use. dslink.best_effort = True dslink.vlan_tagging = True dslink.link_multiplexing = True # The NFS clients, also attached to the NFS lan. for i in range(1, params.clientCount+1): node = request.RawPC("node%d" % i) node.disk_image = params.osImage nfsLan.addInterface(node.addInterface()) # Initialization script for the clients node.addService(pg.Execute(shell="sh", command="sudo /bin/bash /local/repository/nfs-client.sh")) pass # Print the RSpec to the enclosing page. pc.printRequestRSpec(request) """This profile sets up a simple NFS server and a network of clients. The NFS server uses a long term dataset that is persistent across experiments. In order to use this profile, you will need to create your own dataset and use that instead of the demonstration dataset below. If you do not need persistant storage, we have another profile that uses temporary storage (removed when your experiment ends) that you can use. Instructions: Click on any node in the topology and choose the `shell` menu item. Your shared NFS directory is mounted at `/nfs` on all nodes.""" # Import the Portal object. import geni.portal as portal # Import the ProtoGENI library. import geni.rspec.pg as pg # Import the Emulab specific extensions. import geni.rspec.emulab as emulab # Create a portal context. pc = portal.Context() # Create a Request object to start building the RSpec. request = pc.makeRequestRSpec() # Only Ubuntu images supported. imageList = [ ('urn:publicid:IDN+emulab.net+image+emulab-ops//UBUNTU18-64-STD', 'UBUNTU 18.04'), ('urn:publicid:IDN+emulab.net+image+emulab-ops//UBUNTU16-64-STD', 'UBUNTU 16.04'), ('urn:publicid:IDN+emulab.net+image+emulab-ops//CENTOS7-64-STD', 'CENTOS 7'), ] # Do not change these unless you change the setup scripts too. nfsServerName = "nfs" nfsLanName = "nfsLan" nfsDirectory = "/nfs" # Number of NFS clients (there is always a server) pc.defineParameter("clientCount", "Number of NFS clients", portal.ParameterType.INTEGER, 2) pc.defineParameter("osImage", "Select OS image", portal.ParameterType.IMAGE, imageList[2], imageList) # Always need this when using parameters params = pc.bindParameters() # The NFS network. All these options are required. nfsLan = request.LAN(nfsLanName) nfsLan.best_effort = True nfsLan.vlan_tagging = True nfsLan.link_multiplexing = True # The NFS server. nfsServer = request.RawPC(nfsServerName) nfsServer.disk_image = params.osImage # Attach server to lan. nfsLan.addInterface(nfsServer.addInterface()) # Initialization script for the server nfsServer.addService(pg.Execute(shell="sh", command="sudo /bin/bash /local/repository/nfs-server.sh")) # Special node that represents the ISCSI device where the dataset resides dsnode = request.RemoteBlockstore("dsnode", nfsDirectory) dsnode.dataset = "urn:publicid:IDN+emulab.net:portalprofiles+ltdataset+DemoDataset" # Link between the nfsServer and the ISCSI device that holds the dataset dslink = request.Link("dslink") dslink.addInterface(dsnode.interface) dslink.addInterface(nfsServer.addInterface()) # Special attributes for this link that we must use. dslink.best_effort = True dslink.vlan_tagging = True dslink.link_multiplexing = True # The NFS clients, also attached to the NFS lan. for i in range(1, params.clientCount+1): node = request.RawPC("node%d" % i) node.disk_image = params.osImage nfsLan.addInterface(node.addInterface()) # Initialization script for the clients node.addService(pg.Execute(shell="sh", command="sudo /bin/bash /local/repository/nfs-client.sh")) pass # Print the RSpec to the enclosing page. pc.printRequestRSpec(request)

7.2.7.7 Using a Remote Dataset on Multiple Nodes via Clones

TBD.

7.3 RSpecs

The resources (nodes, links, etc.) that define a profile are expressed in the

RSpec

format from the GENI project. In general, RSpec should be thought of as a

sort of “assembly language”—

7.4 Public IP Access

Apt treats publicly-routable IP addresses as an allocatable resource.

By default, all physical hosts are given a public IP address. This IP address is determined by the host, rather than the experiment. There are two DNS names that point to this public address: a static one that is the node’s permanent hostname (such as pcXX.<cluster>.net), and a dynamic one that is assigned based on the experiment; this one may look like <vname>.<exp>.<proj>.<cluster>.net, where <vname> is the name assigned in the request RSpec, <eid> is the name assigned to the experiment, and proj is the project that the experiment belongs to. This name is predictable regardless of the physical nodes assigned.

By default, virtual machines are not given public IP addresses; basic remote access is provided through an ssh server running on a non-standard port, using the physical host’s IP address. This port can be discovered through the manifest of an instantiated experiment, or on the “list view” of the experiment page. If a public IP address is required for a virtual machine (for example, to host a webserver on it), a public address can be requested on a per-VM basis. If using the Jacks GUI, each VM has a checkbox to request a public address. If using python scripts and geni-lib, setting the routable_control_ip property of a node accomplishes the same effect. Different clusters will have different numbers of public addresses available for allocation in this manner.

7.4.1 Dynamic Public IP Addresses

In some cases, users would like to create their own virtual machines, and would like to give them public IP addresses. We also allow profiles to request a pool of dynamic addresses; VMs brought up by the user can then run DHCP to be assigned one of these addresses.

Profiles using python scripts and geni-lib can request dynamic IP address pools by constructing an AddressPool object (defined in the geni.rspec.igext module), as in the following example:

# Request a pool of 3 dynamic IP addresses pool = AddressPool( "poolname", 3 ) rspec.addResource( pool ) # Request a pool of 3 dynamic IP addresses pool = AddressPool( "poolname", 3 ) rspec.addResource( pool )

The addresses assigned to the pool are found in the experiment manifest.

7.5 Markdown

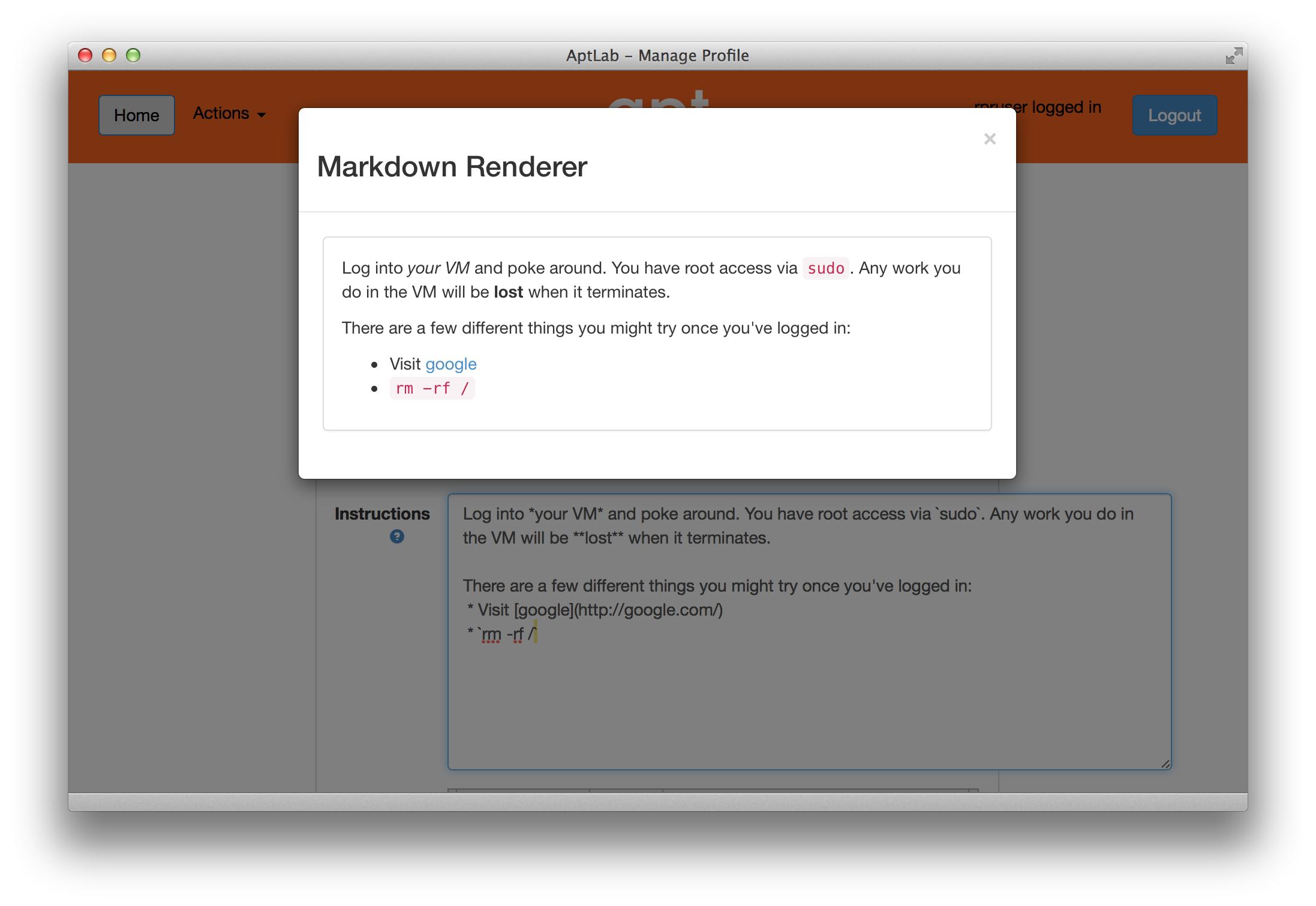

Apt supports Markdown in the major text fields in RSpecs. Markdown is a simple formatting syntax with a straightforward translation to basic HTML elements such as headers, lists, and pre-formatted text. You may find this useful in the description and instructions attached to your profile.

While editing a profile, you can preview the Markdown rendering of the Instructions or Description field by double-clicking within the text box.

You will probably find the Markdown manual to be useful.

7.6 Introspection

Apt implements the GENI APIs, and in particular the geni-get command. geni-get is a generic means for nodes to query their own configuration and metadata, and is pre-installed on all facility-provided disk images. (If you are supplying your own disk image built from scratch, you can add the geni-get client from its repository.)

While geni-get supports many options, there are five commands most useful in the Apt context.

7.6.1 Client ID

Invoking geni-get client_id will print a single line to standard output showing the identifier specified in the profile corresponding to the node on which it is run. This is a particularly useful feature in execute services, where a script might want to vary its behaviour between different nodes.

7.6.2 Control MAC

The command geni-get control_mac will print the MAC address of the control interface (as a string of 12 hexadecimal digits with no punctuation). In some circumstances this can be a useful means to determine which interface is attached to the control network, as OSes are not necessarily consistent in assigning identifiers to network interfaces.

7.6.3 Manifest

To retrieve the manifest RSpec for the instance, you can use the command geni-get manifest. It will print the manifest to standard output, including any annotations added during instantiation. For instance, this is an appropriate technique to use to query the allocation of a dynamic public IP address pool.

7.6.4 Private key

As a convenience, Apt will automatically generate an RSA private key unique to each profile instance. geni-get key will retrieve the private half of the keypair, which makes it a useful command for profiles bootstraping an authenticated channel. For instance:

#!/bin/sh # Create the user SSH directory, just in case. mkdir $HOME/.ssh && chmod 700 $HOME/.ssh # Retrieve the server-generated RSA private key. geni-get key > $HOME/.ssh/id_rsa chmod 600 $HOME/.ssh/id_rsa # Derive the corresponding public key portion. ssh-keygen -y -f $HOME/.ssh/id_rsa > $HOME/.ssh/id_rsa.pub # If you want to permit login authenticated by the auto-generated key, # then append the public half to the authorized_keys file: grep -q -f $HOME/.ssh/id_rsa.pub $HOME/.ssh/authorized_keys || cat $HOME/.ssh/id_rsa.pub >> $HOME/.ssh/authorized_keys #!/bin/sh # Create the user SSH directory, just in case. mkdir $HOME/.ssh && chmod 700 $HOME/.ssh # Retrieve the server-generated RSA private key. geni-get key > $HOME/.ssh/id_rsa chmod 600 $HOME/.ssh/id_rsa # Derive the corresponding public key portion. ssh-keygen -y -f $HOME/.ssh/id_rsa > $HOME/.ssh/id_rsa.pub # If you want to permit login authenticated by the auto-generated key, # then append the public half to the authorized_keys file: grep -q -f $HOME/.ssh/id_rsa.pub $HOME/.ssh/authorized_keys || cat $HOME/.ssh/id_rsa.pub >> $HOME/.ssh/authorized_keys

Please note that the private key will be accessible to any user who can invoke geni-get from within the profile instance. Therefore, it is NOT suitable for an authentication mechanism for privilege within a multi-user instance!

7.6.5 Profile parameters

When executing within the context of a profile instantiated with user-specified parameters, geni-get allows the retrieval of any of those parameters. The proper syntax is geni-get "param name", where name is the parameter name as specified in the geni-lib script defineParameter call. For example, geni-get "param n" would retrieve the number of nodes in an instance of the profile shown in the geni-lib parameter section.

7.7 User-controlled switches and layer-1 topologies

Some experiments require exclusive access to Ethernet switches and/or the ability for users to reconfigure those switches. One example of a good use case for this feature is to enable or tune QoS features that cannot be enabled on Apt’s shared infrastructure switches.

User-allocated switches are treated similarly to the way Apt treats servers: the switches appear as nodes in your topology, and you ’wire’ them to PCs and each other using point-to-point layer-1 links. When one of these switches is allocated to an experiment, that experiment is the exclusive user, just as it is for a raw PC, and the user has ssh access with full administrative control. This means that users are free to enable and disable features, tweak parameters, reconfigure as will, etc. Users are be given the switches in a ’clean’ state (we do little configuration on them), and can reload and reboot them like you would do with a server.

The list of available switches is found in our hardware chapter, and the following example shows how to request a simple topology using geni-lib.

"""This profile allocates two bare metal nodes and connects them together via a Dell or Mellanox switch with layer1 links. Instructions: Click on any node in the topology and choose the `shell` menu item. When your shell window appears, use `ping` to test the link. You will be able to ping the other node through the switch fabric. We have installed a minimal configuration on your switches that enables the ports that are in use, and turns on spanning-tree (RSTP) in case you inadvertently created a loop with your topology. All unused ports are disabled. The ports are in Vlan 1, which effectively gives a single broadcast domain. If you want anything fancier, you will need to open up a shell window to your switches and configure them yourself. If your topology has more then a single switch, and you have links between your switches, we will enable those ports too, but we do not put them into switchport mode or bond them into a single channel, you will need to do that yourself. If you make any changes to the switch configuration, be sure to write those changes to memory. We will wipe the switches clean and restore a default configuration when your experiment ends.""" # Import the Portal object. import geni.portal as portal # Import the ProtoGENI library. import geni.rspec.pg as pg # Import the Emulab specific extensions. import geni.rspec.emulab as emulab # Create a portal context. pc = portal.Context() # Create a Request object to start building the RSpec. request = pc.makeRequestRSpec() pc.defineParameter("phystype", "Switch type", portal.ParameterType.STRING, "dell-s4048", [('mlnx-sn2410', 'Mellanox SN2410'), ('dell-s4048', 'Dell S4048')]) # Retrieve the values the user specifies during instantiation. params = pc.bindParameters() # Do not run snmpit #request.skipVlans() # Add a raw PC to the request and give it an interface. node1 = request.RawPC("node1") iface1 = node1.addInterface() # Specify the IPv4 address iface1.addAddress(pg.IPv4Address("192.168.1.1", "255.255.255.0")) # Add Switch to the request and give it a couple of interfaces mysw = request.Switch("mysw"); mysw.hardware_type = params.phystype swiface1 = mysw.addInterface() swiface2 = mysw.addInterface() # Add another raw PC to the request and give it an interface. node2 = request.RawPC("node2") iface2 = node2.addInterface() # Specify the IPv4 address iface2.addAddress(pg.IPv4Address("192.168.1.2", "255.255.255.0")) # Add L1 link from node1 to mysw link1 = request.L1Link("link1") link1.addInterface(iface1) link1.addInterface(swiface1) # Add L1 link from node2 to mysw link2 = request.L1Link("link2") link2.addInterface(iface2) link2.addInterface(swiface2) # Print the RSpec to the enclosing page. pc.printRequestRSpec(request) Open this profile on Apt"""This profile allocates two bare metal nodes and connects them together via a Dell or Mellanox switch with layer1 links. Instructions: Click on any node in the topology and choose the `shell` menu item. When your shell window appears, use `ping` to test the link. You will be able to ping the other node through the switch fabric. We have installed a minimal configuration on your switches that enables the ports that are in use, and turns on spanning-tree (RSTP) in case you inadvertently created a loop with your topology. All unused ports are disabled. The ports are in Vlan 1, which effectively gives a single broadcast domain. If you want anything fancier, you will need to open up a shell window to your switches and configure them yourself. If your topology has more then a single switch, and you have links between your switches, we will enable those ports too, but we do not put them into switchport mode or bond them into a single channel, you will need to do that yourself. If you make any changes to the switch configuration, be sure to write those changes to memory. We will wipe the switches clean and restore a default configuration when your experiment ends.""" # Import the Portal object. import geni.portal as portal # Import the ProtoGENI library. import geni.rspec.pg as pg # Import the Emulab specific extensions. import geni.rspec.emulab as emulab # Create a portal context. pc = portal.Context() # Create a Request object to start building the RSpec. request = pc.makeRequestRSpec() pc.defineParameter("phystype", "Switch type", portal.ParameterType.STRING, "dell-s4048", [('mlnx-sn2410', 'Mellanox SN2410'), ('dell-s4048', 'Dell S4048')]) # Retrieve the values the user specifies during instantiation. params = pc.bindParameters() # Do not run snmpit #request.skipVlans() # Add a raw PC to the request and give it an interface. node1 = request.RawPC("node1") iface1 = node1.addInterface() # Specify the IPv4 address iface1.addAddress(pg.IPv4Address("192.168.1.1", "255.255.255.0")) # Add Switch to the request and give it a couple of interfaces mysw = request.Switch("mysw"); mysw.hardware_type = params.phystype swiface1 = mysw.addInterface() swiface2 = mysw.addInterface() # Add another raw PC to the request and give it an interface. node2 = request.RawPC("node2") iface2 = node2.addInterface() # Specify the IPv4 address iface2.addAddress(pg.IPv4Address("192.168.1.2", "255.255.255.0")) # Add L1 link from node1 to mysw link1 = request.L1Link("link1") link1.addInterface(iface1) link1.addInterface(swiface1) # Add L1 link from node2 to mysw link2 = request.L1Link("link2") link2.addInterface(iface2) link2.addInterface(swiface2) # Print the RSpec to the enclosing page. pc.printRequestRSpec(request)

This feature is implemented using a set of layer-1 switches between some servers and Ethernet switches. These switches act as “patch panels,” allowing us to “wire” servers to switches with no intervening Ethernet packet processing and minimal impact on latency. This feature can also be used to “wire” servers directly to one another, and to create links between switches, as seen in the following two examples.

"""This profile allocates two bare metal nodes and connects them directly together via a layer1 link. Instructions: Click on any node in the topology and choose the `shell` menu item. When your shell window appears, use `ping` to test the link.""" # Import the Portal object. import geni.portal as portal # Import the ProtoGENI library. import geni.rspec.pg as pg # Import the Emulab specific extensions. import geni.rspec.emulab as emulab # Create a portal context. pc = portal.Context() # Create a Request object to start building the RSpec. request = pc.makeRequestRSpec() # Do not run snmpit #request.skipVlans() # Add a raw PC to the request and give it an interface. node1 = request.RawPC("node1") # Must use UBUNTU18 to utilize layer 1 links. node1.disk_image = "urn:publicid:IDN+emulab.net+image+emulab-ops//UBUNTU18-64-STD" iface1 = node1.addInterface() # Specify the IPv4 address iface1.addAddress(pg.IPv4Address("192.168.1.1", "255.255.255.0")) # Add another raw PC to the request and give it an interface. node2 = request.RawPC("node2") # Must use UBUNTU18 to utilize layer 1 links. node2.disk_image = "urn:publicid:IDN+emulab.net+image+emulab-ops//UBUNTU18-64-STD" iface2 = node2.addInterface() # Specify the IPv4 address iface2.addAddress(pg.IPv4Address("192.168.1.2", "255.255.255.0")) # Add L1 link from node1 to node2 link1 = request.L1Link("link1") link1.addInterface(iface1) link1.addInterface(iface2) # Print the RSpec to the enclosing page. pc.printRequestRSpec(request) Open this profile on Apt"""This profile allocates two bare metal nodes and connects them directly together via a layer1 link. Instructions: Click on any node in the topology and choose the `shell` menu item. When your shell window appears, use `ping` to test the link.""" # Import the Portal object. import geni.portal as portal # Import the ProtoGENI library. import geni.rspec.pg as pg # Import the Emulab specific extensions. import geni.rspec.emulab as emulab # Create a portal context. pc = portal.Context() # Create a Request object to start building the RSpec. request = pc.makeRequestRSpec() # Do not run snmpit #request.skipVlans() # Add a raw PC to the request and give it an interface. node1 = request.RawPC("node1") # Must use UBUNTU18 to utilize layer 1 links. node1.disk_image = "urn:publicid:IDN+emulab.net+image+emulab-ops//UBUNTU18-64-STD" iface1 = node1.addInterface() # Specify the IPv4 address iface1.addAddress(pg.IPv4Address("192.168.1.1", "255.255.255.0")) # Add another raw PC to the request and give it an interface. node2 = request.RawPC("node2") # Must use UBUNTU18 to utilize layer 1 links. node2.disk_image = "urn:publicid:IDN+emulab.net+image+emulab-ops//UBUNTU18-64-STD" iface2 = node2.addInterface() # Specify the IPv4 address iface2.addAddress(pg.IPv4Address("192.168.1.2", "255.255.255.0")) # Add L1 link from node1 to node2 link1 = request.L1Link("link1") link1.addInterface(iface1) link1.addInterface(iface2) # Print the RSpec to the enclosing page. pc.printRequestRSpec(request)

"""This profile allocates two bare metal nodes and connects them together via two Dell or Mellanox switches with layer1 links. Instructions: Click on any node in the topology and choose the `shell` menu item. When your shell window appears, use `ping` to test the link. You will be able to ping the other node through the switch fabric. We have installed a minimal configuration on your switches that enables the ports that are in use, and turns on spanning-tree (RSTP) in case you inadvertently created a loop with your topology. All unused ports are disabled. The ports are in Vlan 1, which effectively gives a single broadcast domain. If you want anything fancier, you will need to open up a shell window to your switches and configure them yourself. If your topology has more then a single switch, and you have links between your switches, we will enable those ports too, but we do not put them into switchport mode or bond them into a single channel, you will need to do that yourself. If you make any changes to the switch configuration, be sure to write those changes to memory. We will wipe the switches clean and restore a default configuration when your experiment ends.""" # Import the Portal object. import geni.portal as portal # Import the ProtoGENI library. import geni.rspec.pg as pg # Import the Emulab specific extensions. import geni.rspec.emulab as emulab # Create a portal context. pc = portal.Context() # Create a Request object to start building the RSpec. request = pc.makeRequestRSpec() pc.defineParameter("phystype1", "Switch 1 type", portal.ParameterType.STRING, "dell-s4048", [('mlnx-sn2410', 'Mellanox SN2410'), ('dell-s4048', 'Dell S4048')]) pc.defineParameter("phystype2", "Switch 2 type", portal.ParameterType.STRING, "dell-s4048", [('mlnx-sn2410', 'Mellanox SN2410'), ('dell-s4048', 'Dell S4048')]) # Retrieve the values the user specifies during instantiation. params = pc.bindParameters() # Do not run snmpit #request.skipVlans() # Add a raw PC to the request and give it an interface. node1 = request.RawPC("node1") iface1 = node1.addInterface() # Specify the IPv4 address iface1.addAddress(pg.IPv4Address("192.168.1.1", "255.255.255.0")) # Add first switch to the request and give it a couple of interfaces mysw1 = request.Switch("mysw1"); mysw1.hardware_type = params.phystype1 sw1iface1 = mysw1.addInterface() sw1iface2 = mysw1.addInterface() # Add second switch to the request and give it a couple of interfaces mysw2 = request.Switch("mysw2"); mysw2.hardware_type = params.phystype2 sw2iface1 = mysw2.addInterface() sw2iface2 = mysw2.addInterface() # Add another raw PC to the request and give it an interface. node2 = request.RawPC("node2") iface2 = node2.addInterface() # Specify the IPv4 address iface2.addAddress(pg.IPv4Address("192.168.1.2", "255.255.255.0")) # Add L1 link from node1 to mysw1 link1 = request.L1Link("link1") link1.addInterface(iface1) link1.addInterface(sw1iface1) # Add L1 link from mysw1 to mysw2 trunk = request.L1Link("trunk") trunk.addInterface(sw1iface2) trunk.addInterface(sw2iface2) # Add L1 link from node2 to mysw2 link2 = request.L1Link("link2") link2.addInterface(iface2) link2.addInterface(sw2iface1) # Print the RSpec to the enclosing page. pc.printRequestRSpec(request) Open this profile on Apt"""This profile allocates two bare metal nodes and connects them together via two Dell or Mellanox switches with layer1 links. Instructions: Click on any node in the topology and choose the `shell` menu item. When your shell window appears, use `ping` to test the link. You will be able to ping the other node through the switch fabric. We have installed a minimal configuration on your switches that enables the ports that are in use, and turns on spanning-tree (RSTP) in case you inadvertently created a loop with your topology. All unused ports are disabled. The ports are in Vlan 1, which effectively gives a single broadcast domain. If you want anything fancier, you will need to open up a shell window to your switches and configure them yourself. If your topology has more then a single switch, and you have links between your switches, we will enable those ports too, but we do not put them into switchport mode or bond them into a single channel, you will need to do that yourself. If you make any changes to the switch configuration, be sure to write those changes to memory. We will wipe the switches clean and restore a default configuration when your experiment ends.""" # Import the Portal object. import geni.portal as portal # Import the ProtoGENI library. import geni.rspec.pg as pg # Import the Emulab specific extensions. import geni.rspec.emulab as emulab # Create a portal context. pc = portal.Context() # Create a Request object to start building the RSpec. request = pc.makeRequestRSpec() pc.defineParameter("phystype1", "Switch 1 type", portal.ParameterType.STRING, "dell-s4048", [('mlnx-sn2410', 'Mellanox SN2410'), ('dell-s4048', 'Dell S4048')]) pc.defineParameter("phystype2", "Switch 2 type", portal.ParameterType.STRING, "dell-s4048", [('mlnx-sn2410', 'Mellanox SN2410'), ('dell-s4048', 'Dell S4048')]) # Retrieve the values the user specifies during instantiation. params = pc.bindParameters() # Do not run snmpit #request.skipVlans() # Add a raw PC to the request and give it an interface. node1 = request.RawPC("node1") iface1 = node1.addInterface() # Specify the IPv4 address iface1.addAddress(pg.IPv4Address("192.168.1.1", "255.255.255.0")) # Add first switch to the request and give it a couple of interfaces mysw1 = request.Switch("mysw1"); mysw1.hardware_type = params.phystype1 sw1iface1 = mysw1.addInterface() sw1iface2 = mysw1.addInterface() # Add second switch to the request and give it a couple of interfaces mysw2 = request.Switch("mysw2"); mysw2.hardware_type = params.phystype2 sw2iface1 = mysw2.addInterface() sw2iface2 = mysw2.addInterface() # Add another raw PC to the request and give it an interface. node2 = request.RawPC("node2") iface2 = node2.addInterface() # Specify the IPv4 address iface2.addAddress(pg.IPv4Address("192.168.1.2", "255.255.255.0")) # Add L1 link from node1 to mysw1 link1 = request.L1Link("link1") link1.addInterface(iface1) link1.addInterface(sw1iface1) # Add L1 link from mysw1 to mysw2 trunk = request.L1Link("trunk") trunk.addInterface(sw1iface2) trunk.addInterface(sw2iface2) # Add L1 link from node2 to mysw2 link2 = request.L1Link("link2") link2.addInterface(iface2) link2.addInterface(sw2iface1) # Print the RSpec to the enclosing page. pc.printRequestRSpec(request)

7.8 Portal API

The Apt portal provides an API that makes it possible to programmatically instantiate, interact with, and terminate experiments. Using this API in combination with shell scripts in a profile, or a tool like pexpect for automating console interactions, can be very useful. For example, the profile at this link shows how one might use these tools to instantiate an experiment based on another profile, use the associated nodes to build and test OAI in a controlled RF environment, and then terminate the experiment. The portal API allows for this kind of orchestration to happen on-demand and without human interaction, e.g., as part of a CI/CD pipeline. More information is available in the profile README.